We have developed a new theory on how flying drones and insects can estimate the gravity direction. Whereas drones typically use accelerometers to this end, the way in which flying insects do this is shrouded in mystery, since they lack a specific sense for acceleration. In an article published today in Nature, scientists from TU Delft, the Netherlands, and CNRS / Aix-Marseille University, France, have shown that drones can estimate the gravity direction by combining visual motion sensing with a model of how they move. This new approach is an important step for the creation of autonomous tiny drones, since it requires fewer sensors. Moreover, it forms a hypothesis for how insects control their attitude, as the theory forms a parsimonious explanation of multiple phenomena observed in biology.

The importance and difficulty of finding the gravity direction

Successful flight requires knowing the direction of gravity. As ground-bound animals, we humans typically have no trouble determining which way is down. However, this becomes more difficult when flying. Indeed, the passengers in an airplane are normally not aware of the plane being slightly tilted sideways in the air to make a wide circle. When humans started to take to the skies, pilots relied purely on visually detecting the horizon line for determining the plane’s “attitude”, that is, its body orientation with respect to gravity. However, when flying through clouds the horizon line is no longer visible, which can lead to an increasingly wrong impression of what is up and down – with potentially disastrous consequences.

Drones and flying insects also need to control their attitude. Drones typically use accelerometers for determining the gravity direction. However, in flying insects no sensing organ for measuring accelerations has been found. Hence, it is currently still a mystery how insects estimate attitude, and some even question whether they estimate attitude at all.

Optic flow suffices for finding attitude

Although it is unknown how flying insects estimate and control their attitude, it is very well known that they visually observe motion by means of “optic flow”. Optic flow captures the relative motion between an observer and its environment. For example, when sitting in a train, trees close by seem to move very fast (have a large optic flow), while mountains in the distance seem to move very slowly (have a small optic flow).
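The train example can be put into numbers with a minimal sketch. It assumes the standard relation for purely translational optic flow, in which the apparent angular speed of an object equals the observer's speed times the sine of the viewing angle, divided by the distance to the object. The function name, the train speed, and the distances below are illustrative assumptions and do not come from the article.

```python
import math


def translational_optic_flow(speed_m_s, distance_m, viewing_angle_rad=math.pi / 2):
    """Apparent angular speed (rad/s) of a static object seen by a moving observer.

    viewing_angle_rad is the angle between the direction of travel and the
    line of sight; pi/2 means looking straight out of the side window.
    """
    return speed_m_s * math.sin(viewing_angle_rad) / distance_m


# Hypothetical numbers: a train moving at 30 m/s (about 108 km/h).
train_speed = 30.0
tree_flow = translational_optic_flow(train_speed, distance_m=10.0)
mountain_flow = translational_optic_flow(train_speed, distance_m=10_000.0)

print(f"tree:     {tree_flow:.4f} rad/s")
print(f"mountain: {mountain_flow:.4f} rad/s")
```

Under these assumptions the nearby tree sweeps across the field of view a thousand times faster than the distant mountain, which is why optic flow on its own mixes up speed and distance.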

“This research started with the question of whether optic flow could convey any information on attitude,” says Guido de Croon, Full Professor of Bio-inspired Micro Air Vehicles. “Intuitively, this could be the case. For instance, if a fly stops flapping its wings, gravity will make it accelerate downwards. The resulting downward motion can be picked up by means of optic flow. This can allow the fly to find the gravity direction and hence its attitude.”
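The falling-fly intuition can be illustrated with a toy calculation. This is only a sketch of the intuition, not the method from the article: it assumes drag-free free fall, a flat wall straight ahead at a known distance, and a body that is rolled about its viewing axis by an unknown angle. All names and numbers are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def flow_during_free_fall(roll_rad, fall_time_s, wall_distance_m):
    """Optic flow vector (rad/s) in the rolled body frame after a drag-free fall.

    The observer looks at a wall straight ahead. World "down" is (0, -1) in
    the image plane; rotating it into the body frame gives (-sin, -cos) of roll.
    """
    fall_speed = G * fall_time_s
    down_x, down_y = -math.sin(roll_rad), -math.cos(roll_rad)
    # The scene appears to move opposite to the observer's own motion.
    return (-fall_speed * down_x / wall_distance_m,
            -fall_speed * down_y / wall_distance_m)


def roll_from_flow(flow_x, flow_y):
    """Recover the roll angle: gravity points opposite to the observed flow."""
    norm = math.hypot(flow_x, flow_y)
    down_x, down_y = -flow_x / norm, -flow_y / norm
    return math.atan2(-down_x, -down_y)


true_roll = 0.3  # rad, unknown to the observer
flow = flow_during_free_fall(true_roll, fall_time_s=0.1, wall_distance_m=2.0)
estimated_roll = roll_from_flow(*flow)
print(f"true roll {true_roll:.3f} rad, estimated roll {estimated_roll:.3f} rad")
```

In this idealized setting the direction of the flow vector reveals which way is down in the body frame, even though the flow magnitude still depends on the unknown distance to the wall.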

Based on this initial intuition, the researchers performed a theoretical analysis, combining the physical formulas expressing optic flow measurements with a model of how an insect or drone will accelerate and rotate due to its own actions and gravity.

“We were surprised by the outcome of the theoretical analysis: it showed that when using a motion model, optic flow alone suffices for determining the gravity direction,” adds Abhishek Chatterjee, who worked on the research as an MSc student at TU Delft. “This is surprising, because optic flow itself captures only rotation rates and not attitude angles. Moreover, we feared that if this approach worked, it would only be valid in specific conditions such as when a fly stops flapping its wings.”

In fact, the opposite is true. Finding the gravity direction with optic flow works under almost any condition, except for specific cases such as when the observer is completely still. “At first sight, this may still seem like quite a problem, since it implies that in the important condition of hovering flight the gravity direction cannot be found,” mentions Guido de Croon. “Normally, engineers would add extra sensors so that attitude can also be determined in hover flight. However, we hypothesize that nature has simply accepted this problem. In the article we provide a theoretical proof that despite this problem, an attitude controller will still work around hover at the cost of slight oscillations.”

Implications for robotics

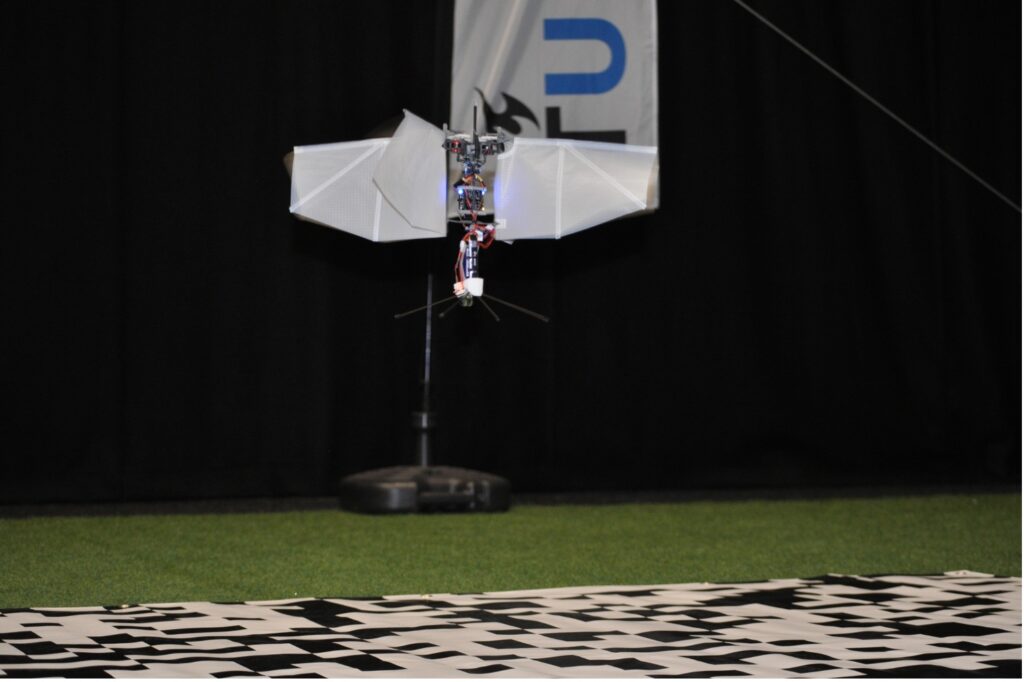

The researchers confirmed the theory’s validity with robotic implementations. Most experiments were performed with a quadrotor drone, which was able to fly completely by itself based on optic flow and gyros – not using the accelerometers. As predicted, when controlling attitude based on optic flow, the robot exhibited slight oscillations when hovering. Moreover, experiments were performed with a flapping wing drone equipped with an artificial insect compound eye. These experiments showed that the flapping motion improved the attitude estimation accuracy.

Flapping wing robot controlling its attitude with the proposed theory. It is equipped with an artificial insect compound eye, which can perceive optic flow at a high frequency. Photo by Christophe de Wagter, TU Delft.

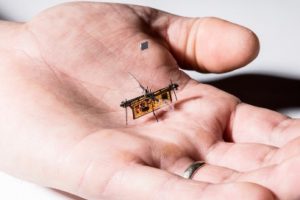

The proposed theory is promising for the field of robotics. “Various research groups strive to design autonomous, insect-sized flying robots,” says Guido de Croon. “Tiny flapping wing drones can be useful for tasks like search-and-rescue or pollination. Designing such drones means dealing with a major challenge that nature also had to face: how to achieve a fully autonomous system subject to extreme payload restrictions. This makes even tiny accelerometers a considerable burden. Our proposed theory will contribute to the design of tiny drones by allowing for a smaller sensor suite.”

Biological insights

The proposed theory also has the potential to give insight into various biological phenomena. “It was known that optic flow played a role in attitude control, but until now the precise mechanism for this was unclear,” explains Franck Ruffier, bio-roboticist and director of research at CNRS / Aix-Marseille University. “The proposed theory can explain how flying insects succeed in estimating and controlling their attitude even in difficult, cluttered environments where the horizon line is not visible. It also provides insight into other phenomena, for example, why locusts fly less well when their ocelli (eyes on the top of their heads) are occluded with paint.”

Honeybee flying in a tapered tunnel. The narrowing tunnel leads to lower flight speeds – and as the researchers noticed – more oscillations of the honeybee body angles. Photo by DGA / François Vrignaud.

Attention will now turn to verifying that insects indeed use the proposed mechanism for attitude control. The challenge here is that the theory concerns neural processes that are hard to monitor in insects during flight. “One avenue that can be taken is to study oscillations of the insects’ bodies and heads,” adds Franck Ruffier. “For the article we re-analyzed biological honeybee data from one of our previous research studies. We found that the honeybees’ body attitude angles had less variation at higher flight speeds. Although in accordance with the proposed theory, this could also be explained by aerodynamic effects. We expect that novel experiments, specifically designed for testing our theory, will be necessary for verifying the use of the proposed mechanism in insects.”

Whatever the outcome, the article shows how the synergy between robotics and biology can lead to technological advances and novel avenues for biological research.

Article:

“Accommodating unobservability to control flight attitude with optic flow”, Nature, G.C.H.E. de Croon, J.J.G. Dupeyroux, C. De Wagter, A. Chatterjee, D.A. Olejnik, and F. Ruffier.

DOI: https://doi.org/10.1038/s41586-022-05182-2

URL: https://www.nature.com/articles/s41586-022-05182-2

Video: https://youtu.be/ugY0RTMjH1s

Additional photos and videos:

https://surfdrive.surf.nl/files/index.php/s/ncgesB1ltRVcn8C

Part of this project was funded by the Dutch Science Foundation (NWO) under grant number 15039.